The first time I watched an autonomous test vehicle navigate an unexpected obstacle course—a child’s ball rolling into the street followed by a dog chasing it, followed by the child running after both—something crystallized about the enormity of what safety engineers are trying to accomplish. The vehicle stopped appropriately, but the sequence of decisions required in those 2.3 seconds involved more computational complexity than I’d fully appreciated until seeing it happen in real-world conditions.

That was 2019, at a testing facility in Arizona. I was consulting on sensor validation protocols for an automotive supplier, and that moment of watching machine perception attempt to match human intuition shaped my understanding of what these safety systems actually have to do—and how far they still have to go.

Since then, I’ve spent considerable time working with and evaluating the AI tools that underpin autonomous vehicle safety. The landscape has evolved dramatically. What seemed like science fiction a decade ago is now deployed in millions of vehicles on public roads. Yet the gap between current capabilities and full autonomy remains significant, and understanding the tools that bridge that gap matters for everyone involved in this space.

This is a detailed examination of the AI tools actually being used to make autonomous and semi-autonomous vehicles safer—what they do, how well they work, and where the field is heading.

Understanding the Safety Challenge

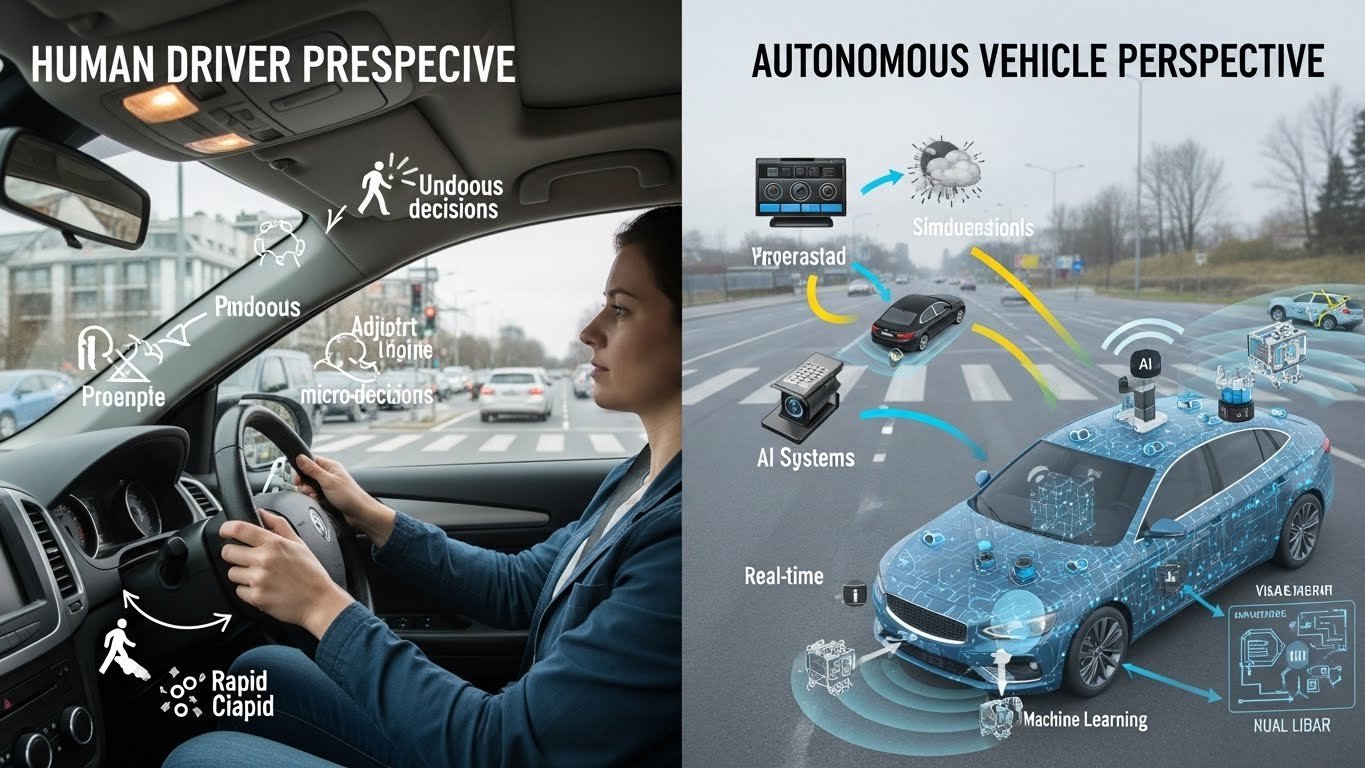

Before diving into specific tools, it’s worth appreciating the scale of what autonomous vehicle safety requires. A human driver makes thousands of micro-decisions during even a short trip, most of them unconsciously. We process visual information, predict other drivers’ behavior, adjust for weather and road conditions, and respond to unexpected events in fractions of seconds.

Replicating this through artificial intelligence requires solving several interconnected problems simultaneously:

Perception: Understanding what’s happening around the vehicle through sensors—cameras, radar, lidar, ultrasonics—and fusing that data into a coherent picture of the environment.

Prediction: Anticipating what other road users will do next. Will that pedestrian step off the curb? Will the car in the next lane change lanes?

Planning: Deciding what the vehicle should do in response—speed up, slow down, change lanes, stop entirely—considering both immediate safety and longer-term route goals.

Control: Executing those decisions through precise manipulation of steering, acceleration, and braking.

Validation: Ensuring all of the above works reliably across the nearly infinite variety of real-world driving conditions.
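To make the division of labor concrete, here is a deliberately tiny Python sketch of one pass through the first four stages. It assumes a single object in the ego vehicle's lane, a constant-velocity prediction, and a fixed braking limit. Every name in it (`Track`, `predict_gap`, `plan`, `control`) is hypothetical. Production stacks replace each function with learned models and far richer state. Validation, the fifth stage, is what exercises a loop like this across many scenarios.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Perception output (hypothetical): one object in the ego vehicle's lane."""
    gap_m: float              # current distance to the object, metres
    closing_speed_mps: float  # rate at which the gap shrinks, m/s


def predict_gap(track: Track, horizon_s: float) -> float:
    # Prediction: constant-velocity extrapolation of the gap
    return track.gap_m - track.closing_speed_mps * horizon_s


def plan(track: Track, ego_speed_mps: float, max_decel_mps2: float = 6.0,
         horizon_s: float = 1.0) -> str:
    # Planning: brake now if waiting one more horizon would leave
    # less room than the vehicle needs to stop at max_decel_mps2
    stopping_distance_m = ego_speed_mps ** 2 / (2 * max_decel_mps2)
    if predict_gap(track, horizon_s) < stopping_distance_m:
        return "brake"
    return "maintain"


def control(action: str) -> dict:
    # Control: map the planned action to normalised actuator commands
    if action == "brake":
        return {"brake": 1.0, "throttle": 0.0}
    return {"brake": 0.0, "throttle": 0.2}


# One cycle: a stationary obstacle 20 m ahead while travelling at ~50 km/h
ego_speed = 13.9
command = control(plan(Track(gap_m=20.0, closing_speed_mps=ego_speed), ego_speed))
```

At 13.9 m/s, the stopping distance is about 16 m. After one more second, only about 6 m of the 20 m gap would remain, so the planner brakes immediately.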

Each of these areas has spawned specialized AI tools, and autonomous vehicle safety depends on getting all of them right.

Perception and Sensor Fusion Platforms

Perception forms the foundation of autonomous vehicle safety. If the system can’t accurately detect and classify objects in the environment, everything downstream fails.

NVIDIA DRIVE

NVIDIA’s DRIVE platform has become something of an industry standard for perception processing. The hardware, particularly the DRIVE Orin and newer DRIVE Thor systems-on-chip, provides the computational power needed to run sophisticated neural networks in real time.

What makes DRIVE particularly valuable for safety applications is the comprehensive software stack built on this hardware. DriveWorks provides sensor abstraction and fusion capabilities that handle the messy reality of combining data from multiple camera, radar, and lidar sensors. Each sensor type has different characteristics—cameras provide rich visual detail but struggle in low light; radar works in all conditions but has lower resolution; lidar provides precise depth information but can be affected by weather. Fusing these into a unified environmental model is non-trivial.
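Inverse-variance weighting is one textbook way to see why fusion helps. Suppose radar and lidar each report a range to the same object, and each has roughly known noise. The combined estimate weights each reading by its confidence, and the result has lower variance than either sensor alone. The sketch below assumes independent Gaussian errors and uses made-up variances. It shows the scalar core of a Kalman update. It is not the DriveWorks API, nor how any particular platform actually fuses data.

```python
from __future__ import annotations


def fuse(measurements: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse independent (value, variance) estimates by inverse-variance weighting.
    Returns the fused value and its variance."""
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical range readings (metres, variance in m^2) for one object;
# in this example radar is the noisier of the two in range
radar = (25.4, 0.25)
lidar = (25.0, 0.04)
value, variance = fuse([radar, lidar])
```

The fused range is about 25.06 m with a variance of 1/29, roughly 0.034 m². That is tighter than the lidar reading alone, and it leans toward lidar because lidar is trusted more here.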

I’ve worked with development teams using DRIVE for ADAS (Advanced Driver Assistance Systems) features, and the maturity of the platform shows. The pre-trained perception models for object detection, lane marking recognition, and traffic sign reading provide a strong starting point. More importantly for safety-critical applications, NVIDIA has invested heavily in functional safety certification—DRIVE Orin achieved ASIL-D certification (the highest automotive safety integrity level) for its computing elements.

The limitation? Cost and power consumption. DRIVE Thor promises to deliver 2,000 TOPS (trillion operations per second) but requires significant power and cooling. For lower-level ADAS features, it’s overkill. For L4 autonomous systems, it’s becoming table stakes.

Mobileye EyeQ

Intel’s Mobileye takes a different approach that’s worth understanding. Where NVIDIA provides a platform for development, Mobileye offers more complete, validated solutions—particularly for production ADAS features.

The EyeQ family of chips, now in its sixth generation, has been deployed in over 125 million vehicles. This scale provides a significant advantage: real-world data from massive fleet deployment feeds continuous improvement. Mobileye’s REM (Road Experience Management) crowdsources map data from vehicles running their systems, creating continuously updated road models that support safety features.

For production ADAS—automatic emergency braking, lane keeping assist, adaptive cruise control—Mobileye’s turnkey approach reduces development time and safety validation burden. The company has done the work of certifying perception algorithms for production use.

I’ve observed that OEMs often use Mobileye for production ADAS while using NVIDIA for autonomous development programs. The tools serve different points on the development-to-production spectrum.

Qualcomm Snapdragon Ride

Qualcomm entered the automotive AI space more recently but has gained ground quickly. The Snapdragon Ride platform emphasizes scalability—the same architecture scales from basic ADAS through L2+ features and into L4 autonomous applications.

What distinguishes Snapdragon Ride for safety applications is the integration of connectivity features. Qualcomm’s heritage in mobile communications translates into strong V2X (vehicle-to-everything) capabilities, enabling safety features that depend on communication between vehicles and infrastructure. Intersection collision warnings, emergency vehicle alerts, and road hazard notifications all benefit from this connectivity focus.
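The core check behind an intersection collision warning can be sketched using only the position and speed each vehicle broadcasts. Estimate when each vehicle will reach the shared conflict zone, and warn if those arrival times fall close together. The message fields, the constant-speed assumption, and the 2-second margin below are illustrative assumptions. They are not taken from any V2X message standard or from Qualcomm's software.

```python
from dataclasses import dataclass


@dataclass
class Broadcast:
    """Hypothetical subset of what a V2X safety message might carry."""
    vehicle_id: str
    distance_to_conflict_m: float  # distance to the shared conflict zone
    speed_mps: float


def arrival_time(msg: Broadcast) -> float:
    # Constant-speed estimate; a stopped vehicle is treated as never arriving
    if msg.speed_mps <= 0:
        return float("inf")
    return msg.distance_to_conflict_m / msg.speed_mps


def intersection_warning(ego: Broadcast, other: Broadcast, margin_s: float = 2.0) -> bool:
    """Warn if both vehicles are predicted to reach the conflict zone within margin_s."""
    # inf - finite is inf and inf - inf is nan; both comparisons return False (no warning)
    return abs(arrival_time(ego) - arrival_time(other)) < margin_s


ego = Broadcast("ego", 50.0, 12.5)           # arrives in 4.0 s
crossing = Broadcast("veh_42", 40.0, 10.0)   # also arrives in 4.0 s
distant = Broadcast("veh_7", 100.0, 10.0)    # arrives in 10.0 s
stopped = Broadcast("veh_9", 10.0, 0.0)      # waiting at the stop line
```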

The platform’s power efficiency also matters for safety. Systems that overheat or drain batteries create their own safety concerns. Snapdragon Ride’s mobile-derived architecture emphasizes performance per watt, which Qualcomm positions as an advantage over competing platforms.

Prediction and Planning Systems

Perception tells you what’s there. Prediction tells you what it’s going to do. This is where safety gets particularly challenging—predicting the behavior of other road users requires understanding human intentions in ways that current AI still struggles with.

Waymo’s Behavior Prediction Systems

Waymo doesn’t sell its prediction tools commercially, but understanding their approach illuminates the state of the art. Their published research on behavior prediction—using transformer architectures to model multi-agent interactions—has influenced the broader industry.

The key insight from Waymo’s work: prediction isn’t just about individual actors but about how actors influence each other. A pedestrian’s decision to cross depends on what cars are doing. A car’s lane change depends on the gap between other vehicles. Modeling these interactions requires architectures that consider joint distributions of future behaviors.
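A toy example shows why the joint view matters. Suppose a car and a pedestrian at a crosswalk can each either go or yield, and assume they never both go. Independent per-agent predictors effectively multiply each agent's marginal probabilities. That product assigns noticeable probability to exactly the outcome the joint distribution rules out. The numbers below are invented for illustration.

```python
from itertools import product

# Hypothetical joint distribution over (car action, pedestrian action)
joint = {
    ("go", "yield"): 0.45,
    ("yield", "go"): 0.45,
    ("yield", "yield"): 0.10,
    ("go", "go"): 0.0,  # the two agents never both proceed
}

actions = ("go", "yield")
car_marginal = {a: sum(p for (c, _), p in joint.items() if c == a) for a in actions}
ped_marginal = {a: sum(p for (_, q), p in joint.items() if q == a) for a in actions}

# Independence assumption: P(car, ped) = P(car) * P(ped)
independent = {(c, q): car_marginal[c] * ped_marginal[q]
               for c, q in product(actions, actions)}

print(independent[("go", "go")])  # roughly 0.20, versus 0.0 under the joint model
```

Each marginal on its own is perfectly accurate. The error appears only when the two are combined as if the agents did not react to each other.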

Waymo’s testing in San Francisco, Phoenix, and other cities has generated prediction datasets that reveal just how complex urban driving is. Edge cases aren’t edge cases when you’re driving millions of miles—they’re everyday occurrences. Their prediction systems have evolved to handle scenarios that seemed impossibly complex five years ago.

Applied Intuition

Applied Intuition has emerged as a significant player in simulation and prediction tools for autonomous vehicle development. Their platform enables developing and testing prediction algorithms against vast libraries of scenarios derived from real-world driving data.

What makes Applied Intuition valuable for safety development is the scenario generation capability. Rather than testing against only scenarios that have actually occurred, their tools can generate plausible variations—what if the pedestrian had been walking faster? What if there had been a second vehicle? This synthetic expansion of test coverage matters enormously for safety validation.
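The "what if" questions above map naturally onto a parameter sweep. Start from one recorded scenario and enumerate variants along the axes you care about. This is a generic sketch with hypothetical field names, not Applied Intuition's API. Real tools sample much larger, continuous spaces and check each variant for physical plausibility.

```python
from dataclasses import dataclass, replace
from itertools import product


@dataclass(frozen=True)
class Scenario:
    """Hypothetical, heavily simplified scenario description."""
    pedestrian_speed_mps: float
    second_vehicle: bool
    ego_speed_mps: float


base = Scenario(pedestrian_speed_mps=1.4, second_vehicle=False, ego_speed_mps=11.0)


def variants(base: Scenario, ped_speeds, second_vehicle_options):
    """Yield every combination of the varied parameters, keeping the rest of the base scenario."""
    for speed, extra_vehicle in product(ped_speeds, second_vehicle_options):
        yield replace(base, pedestrian_speed_mps=speed, second_vehicle=extra_vehicle)


# What if the pedestrian were slower or faster? What if a second vehicle were present?
suite = list(variants(base, ped_speeds=[1.0, 1.4, 2.5], second_vehicle_options=[False, True]))
```

Two axes with three and two values produce six test cases, one of which is the original recording.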

I’ve seen Applied Intuition used to identify failure modes that would have been nearly impossible to discover through road testing alone. The combination of data-driven scenario generation with high-fidelity simulation creates a powerful tool for safety engineers.

Foretellix

Foretellix approaches autonomous vehicle safety from a verification and validation perspective. Their Foretify platform uses coverage-driven verification—a methodology borrowed from semiconductor validation—to systematically test autonomous vehicle behavior across defined operational domains.

The key insight: you can’t test everything, so you need principled methods for covering the space of possible scenarios efficiently. Foretellix’s tools help define what coverage means for autonomous vehicle safety and measure whether testing has achieved adequate coverage.
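In coverage-driven verification, "coverage" becomes measurable when you split each scenario parameter into bins and track which bins the executed tests have hit. The bins are often crossed, so that combinations count, not just individual values. The sketch below assumes two hypothetical parameters, ego speed and pedestrian distance, with arbitrary bin edges. Foretify's own coverage model and scenario language are considerably richer.

```python
from bisect import bisect_right
from itertools import product

SPEED_EDGES = [0, 10, 20, 30]     # km/h: bins [0,10), [10,20), [20,30)
DISTANCE_EDGES = [0, 5, 15, 30]   # metres: bins [0,5), [5,15), [15,30)


def bin_index(value, edges):
    """Return the bin a value falls in, or None if it is outside the defined range."""
    i = bisect_right(edges, value) - 1
    return i if 0 <= i < len(edges) - 1 else None


def cross_coverage(tests):
    """Fraction of (speed bin, distance bin) cells hit by at least one test, plus the missing cells."""
    all_cells = set(product(range(len(SPEED_EDGES) - 1), range(len(DISTANCE_EDGES) - 1)))
    hit = set()
    for speed, distance in tests:
        cell = (bin_index(speed, SPEED_EDGES), bin_index(distance, DISTANCE_EDGES))
        if None not in cell:
            hit.add(cell)
    return len(hit) / len(all_cells), sorted(all_cells - hit)


executed = [(5, 3), (5, 20), (15, 10), (25, 25), (25, 2), (40, 10)]  # (km/h, m)
coverage, missing = cross_coverage(executed)
```

Six tests cover only five of the nine cells. The 40 km/h test falls outside the defined bins, and the missing list says exactly which combinations to target next.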

For safety certification purposes, this methodology matters. Regulators will eventually require demonstrating that autonomous vehicles have been tested sufficiently. Having systematic tools for defining and measuring test coverage provides the evidence base that regulatory acceptance will require.

Simulation and Validation Platforms

Real-world testing is essential but insufficient for autonomous vehicle safety validation. The mathematics are unforgiving: if a fatal accident occurs roughly once per 100 million miles of human driving, and you want to demonstrate that your autonomous system is safer than humans, you need to drive billions of miles—or find other ways to build confidence.
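The arithmetic behind that claim can be sketched with a standard zero-failure bound. Assume fatal crashes arrive as a Poisson process at the human rate quoted above. If a fleet drives m miles with no fatal crashes, the 95% upper confidence bound on its crash rate is -ln(0.05)/m. Setting that bound equal to the human rate gives the miles needed just to match humans at 95% confidence.

```python
import math


def miles_for_zero_failure_demo(rate_per_mile: float, confidence: float) -> float:
    """Miles that must be driven with zero failures to conclude, at the given
    confidence, that the true failure rate is below rate_per_mile.
    Assumes failures follow a Poisson process."""
    # P(no failures in m miles) = exp(-rate * m); require it to be <= 1 - confidence
    return -math.log(1.0 - confidence) / rate_per_mile


human_fatal_rate = 1 / 100_000_000  # roughly one fatal crash per 100 million miles
miles = miles_for_zero_failure_demo(human_fatal_rate, confidence=0.95)
print(f"{miles / 1e6:.0f} million miles")  # prints "300 million miles"
```

That is roughly 300 million failure-free miles merely to show parity. Showing that a system is meaningfully safer, with some crashes actually observed, requires far more. That is how estimates climb into the billions.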

Simulation has become the primary tool for expanding test coverage beyond what physical testing can achieve.

NVIDIA DRIVE Sim

NVIDIA DRIVE Sim builds on their Omniverse platform to create physically accurate simulation environments for autonomous vehicle testing. The emphasis on physical accuracy matters—simulated sensors need to behave like real sensors, including their limitations and failure modes, for simulation results to predict real-world safety.

DRIVE Sim’s integration with the DRIVE development platform creates a coherent workflow from development through simulation to deployment. Algorithms developed using DRIVE can be tested in DRIVE Sim with greater confidence that simulated results will transfer to physical vehicles, although that transfer still has to be confirmed through physical testing.

The platform supports hardware-in-the-loop testing, where actual vehicle computers run within simulated environments. This catches implementation issues that pure software simulation might miss—timing problems, memory constraints, and hardware-software interactions that affect real-world behavior.

Cognata

Cognata focuses specifically on autonomous vehicle simulation with emphasis on safety-critical testing. Their platform generates realistic urban environments, traffic patterns, and edge case scenarios for systematic safety validation.

What distinguishes Cognata is the attention to regulatory requirements. As standards like ISO 21448 (SOTIF—Safety of the Intended Functionality) and ISO 26262 (functional safety) mature, simulation tools need to support compliance demonstration. Cognata’s tools generate the documentation and traceability that safety certification requires.

I’ve worked with teams using Cognata to validate ADAS features for production vehicles. The ability to create repeatable test scenarios—running the same simulated near-miss hundreds of times with small variations—provides confidence that production software handles edge cases appropriately.
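Repeatable variation is easiest to see in miniature. Fix a random seed, perturb a simple braking scenario hundreds of times, and count how often the vehicle fails to stop short of a pedestrian. The kinematics (a fixed reaction delay followed by constant deceleration) and every parameter range below are illustrative assumptions, not Cognata's models. What matters is that the same seed reproduces exactly the same set of runs, so a failure can be replayed and investigated.

```python
import random


def stops_in_time(speed_mps, reaction_s, decel_mps2, distance_m):
    # Distance covered during the reaction delay, then constant-deceleration braking
    stopping_m = speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)
    return stopping_m < distance_m


def run_variations(n_runs, seed):
    """Fraction of perturbed near-miss runs in which the vehicle fails to stop in time."""
    rng = random.Random(seed)  # seeded generator: identical runs on every call
    failures = 0
    for _ in range(n_runs):
        speed = rng.uniform(12.0, 14.0)     # m/s, roughly 43-50 km/h
        reaction = rng.uniform(0.2, 0.5)    # perception plus actuation delay, seconds
        decel = rng.uniform(5.0, 8.0)       # braking, m/s^2 (road-surface dependent)
        distance = rng.uniform(18.0, 25.0)  # pedestrian distance at detection, metres
        if not stops_in_time(speed, reaction, decel, distance):
            failures += 1
    return failures / n_runs


failure_rate = run_variations(500, seed=7)
```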

CARLA

CARLA (Car Learning to Act) deserves mention as an open-source alternative to commercial simulation platforms. Developed initially by researchers at Intel Labs and the Computer Vision Center in Barcelona, CARLA has become a common platform for academic research and early-stage development.

For safety research specifically, CARLA’s openness enables experimentation that commercial platforms might not support. Custom sensors, unusual vehicle configurations, and experimental algorithms can be tested without licensing constraints.

The limitation is production readiness. CARLA lacks the validation and certification support that commercial platforms provide. It’s a research tool, not a production tool—but the research it enables often influences production systems.

Metamoto (now part of Ansys)

Ansys’s acquisition of Metamoto brought autonomous vehicle simulation into a broader safety engineering ecosystem. Ansys has deep experience in safety-critical system simulation for aerospace and other industries; applying that expertise to autonomous vehicles creates interesting possibilities.

The integration of autonomous vehicle simulation with Ansys’s functional safety tools enables system-level safety analysis that considers both the AI components and traditional vehicle systems. Understanding how AI safety features interact with conventional vehicle dynamics, structural safety, and occupant protection requires tools that span these domains.

Edge Case Detection and Robustness Testing

Real-world driving presents situations that developers never anticipated. Tools that systematically discover edge cases and test system robustness against them are essential for autonomous vehicle safety.

DeepTest and Related Research Tools

Academic research on adversarial testing for autonomous vehicles has produced tools that identify inputs causing system failures. DeepTest, developed by researchers at Columbia University and the University of Virginia, generates test images that are realistic but challenging: poor lighting conditions, unusual camera angles, and environmental effects such as fog and rain that stress perception systems.

While not production tools themselves, these research platforms have influenced commercial offerings. The insight that autonomous systems need testing specifically designed to find their weaknesses—not just testing against typical conditions—has become standard practice.
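DeepTest's central check is metamorphic. A realistic transformation of the input, such as a brightness change, should not change a steering model's output by much. The sketch below applies that check to a deliberately brittle, hypothetical "model" that steers toward the brightest pixel in the bottom image row. A brightness boost saturates two pixels at 1.0, and the check catches the resulting jump in output. DeepTest itself targets real DNN steering models and uses neuron coverage to guide which transformations to try. This sketch omits both.

```python
from typing import Callable, Dict, List

Image = List[List[float]]  # grayscale intensities in [0, 1]


def adjust_brightness(img: Image, delta: float) -> Image:
    # Shift every pixel and clamp to the valid range (clamping can saturate pixels)
    return [[min(1.0, max(0.0, p + delta)) for p in row] for row in img]


def toy_steering(img: Image) -> float:
    """Hypothetical, deliberately brittle model: steer toward the brightest pixel
    in the bottom row (first one wins on ties). Returns a value in [-1, 1]."""
    row = img[-1]
    center = (len(row) - 1) / 2
    return (row.index(max(row)) - center) / center


def metamorphic_violations(model: Callable[[Image], float], images: List[Image],
                           transforms: Dict[str, Callable[[Image], Image]],
                           tolerance: float):
    """Report (image index, transform name, original output, transformed output)
    whenever a transform changes the model's output by more than tolerance."""
    violations = []
    for i, img in enumerate(images):
        baseline = model(img)
        for name, transform in transforms.items():
            out = model(transform(img))
            if abs(out - baseline) > tolerance:
                violations.append((i, name, baseline, out))
    return violations


frame = [[0.0, 0.0, 0.0, 0.0, 0.0],
         [0.2, 0.5, 0.9, 0.95, 0.3]]
transforms = {
    "darker": lambda img: adjust_brightness(img, -0.1),
    "brighter": lambda img: adjust_brightness(img, 0.2),
}
violations = metamorphic_violations(toy_steering, [frame], transforms, tolerance=0.1)
```

Darkening leaves the output at 0.5. Brightening clamps both 0.9 and 0.95 to 1.0, the tie flips the chosen column, and the steering output drops to 0.0. The check reports that as a single violation.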

Scale AI

Scale AI provides data labeling services that have become crucial for training and validating perception systems. Accurate labels on training data directly affect safety; mislabeled training examples can create blind spots in deployed systems.

Scale’s focus on quality and their experience with safety-critical labeling (including work for defense and aviation clients) translates into appropriate rigor for autonomous vehicle applications. Their Edge Case Discovery tools help identify unusual situations in driving data that deserve special attention.

For safety validation specifically, Scale’s ability to handle large volumes of data with consistent quality enables the scale of testing that autonomous vehicle safety requires.
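One common way to put numbers on label quality is to have two annotators box the same frame and compare their boxes using intersection-over-union (IoU). Objects with low agreement, or labeled by only one annotator, are escalated for review. The box format, the 0.7 threshold, and the object IDs below are assumptions for illustration. This is a generic QA check, not Scale's pipeline.

```python
from typing import Dict, List, NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned bounding box in pixel coordinates, (x1, y1) to (x2, y2)."""
    x1: float
    y1: float
    x2: float
    y2: float


def iou(a: Box, b: Box) -> float:
    # Overlap rectangle; width/height clamp to zero when the boxes don't intersect
    inter_w = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    inter_h = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = inter_w * inter_h
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def flag_disagreements(labels_a: Dict[str, Box], labels_b: Dict[str, Box],
                       threshold: float = 0.7) -> List[Tuple[str, float]]:
    """Return (object id, IoU) for objects the two annotators disagree on.
    An object labelled by only one annotator is flagged with IoU 0.0."""
    flagged = []
    for obj_id in sorted(set(labels_a) | set(labels_b)):
        if obj_id not in labels_a or obj_id not in labels_b:
            flagged.append((obj_id, 0.0))
            continue
        score = iou(labels_a[obj_id], labels_b[obj_id])
        if score < threshold:
            flagged.append((obj_id, score))
    return flagged


annotator_a = {"ped_1": Box(0, 0, 10, 10), "car_1": Box(20, 20, 40, 40)}
annotator_b = {"ped_1": Box(0.5, 0, 10.5, 10), "car_1": Box(30, 20, 50, 40),
               "dog_1": Box(5, 5, 8, 8)}
flagged = flag_disagreements(annotator_a, annotator_b)
```

The pedestrian boxes agree closely (IoU about 0.90). The car boxes overlap by only a third, and the dog was missed by one annotator, so both go back for review.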

Parallel Domain

Parallel Domain focuses on synthetic data generation—creating realistic simulated driving images and scenarios for training and testing perception systems. This matters for safety because real-world data inevitably has gaps.

Consider rare events like debris in the road, animals crossing, or unusual vehicle configurations (oversized loads, vehicles being towed). These occur rarely enough that collecting adequate real-world examples is difficult. Synthetic data generation can fill these gaps, ensuring perception systems are trained on comprehensive representations of possible scenarios.

The quality of synthetic data matters enormously. Poorly generated synthetic data can create artifacts that don’t exist in the real world, potentially confusing systems trained on it. Parallel Domain’s emphasis on photorealistic generation with physically accurate sensor simulation addresses this concern.

Functional Safety and Certification Tools

As autonomous vehicle features move toward production, functional safety tools that ensure compliance with automotive safety standards become essential.

Vector Informatik

Vector has long provided tools for automotive software development, and their CANoe and vTESTstudio products have been adapted for ADAS and autonomous vehicle testing. The tools support simulation, testing, and validation workflows that connect to established functional safety processes.

For safety-critical automotive development, Vector’s tools integrate with requirements management, design, and certification workflows. This integration matters because safety is a systems engineering discipline—individual components might work perfectly while system-level safety fails. Tools that support systematic safety analysis across development lifecycle stages help prevent such failures.

dSPACE

dSPACE specializes in hardware-in-the-loop testing for automotive systems. Its SCALEXIO platform enables testing actual vehicle ECUs in simulated environments, catching implementation issues that pure simulation might miss, while VEOS runs virtual ECUs on a PC for software-in-the-loop testing earlier in development.

For autonomous vehicle safety specifically, dSPACE’s ASM (Automotive Simulation Models) suite provides validated vehicle dynamics models that support testing of integrated systems. Understanding how safety features interact with vehicle dynamics—how automatic braking affects stability, how steering interventions influence handling—requires physically accurate models.

Ansys medini analyze

Ansys medini analyze specifically targets functional safety analysis according to automotive standards. The tool supports systematic identification of hazards, safety goal definition, and allocation of safety requirements to components.

For autonomous vehicles, where AI components complicate traditional safety analysis, medini analyze’s approach to modeling system behavior and failure modes provides necessary rigor. The tool’s support for SOTIF analysis is particularly relevant—understanding safety limitations of the intended functionality, even when everything is working correctly, is central to autonomous vehicle safety.
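ISO 26262's hazard analysis and risk assessment rates each hazardous event for severity (S0 to S3), exposure (E0 to E4), and controllability (C0 to C3), then maps the combination to an ASIL. A well-known property of the standard's lookup table is that, when all three classes are 1 or above, it reduces to the sum of the class numbers. A sum of 10 gives ASIL D, 9 gives ASIL C, 8 gives ASIL B, 7 gives ASIL A, and anything lower gives QM. A class of 0 in any dimension also yields QM. The sketch below encodes that shortcut. It illustrates the kind of bookkeeping tools like medini analyze automate, not the tool's interface.

```python
def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """ASIL for a hazardous event from its S (0-3), E (0-4) and C (0-3) class numbers,
    using the sum shortcut that reproduces the ISO 26262-3 table."""
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("classification out of range")
    if 0 in (severity, exposure, controllability):
        return "QM"  # class 0 in any dimension: no ASIL is assigned
    total = severity + exposure + controllability
    return {10: "ASIL D", 9: "ASIL C", 8: "ASIL B", 7: "ASIL A"}.get(total, "QM")


# Hypothetical example: unintended full braking on a highway
# S3 (life-threatening), E4 (high exposure), C3 (difficult to control)
print(determine_asil(3, 4, 3))
```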

Real-Time Monitoring and Runtime Safety

Safety doesn’t end at deployment. Monitoring systems in operation and ensuring runtime safety requires additional tools.

Helm.ai

Helm.ai focuses on deep learning systems that can be deployed efficiently on vehicle hardware while maintaining safety properties. Their emphasis on unsupervised learning reduces dependence on labeled data, which has safety implications: training is not limited to the scenarios someone has had the time and budget to annotate.

For runtime safety specifically, Helm.ai’s architecture enables uncertainty estimation, so the system can flag situations that may lie outside its competence. That signal allows appropriate fallback behaviors when confidence is low.
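One simple, widely used proxy for "knowing when it doesn't know" is the entropy of a classifier's output distribution. Confident predictions have low entropy, and ambiguous ones have high entropy. The threshold and the fallback label below are hypothetical, and this is not a description of Helm.ai's architecture. Production systems typically combine several uncertainty signals, such as ensembles, calibration, and out-of-distribution detectors.

```python
import math


def entropy(probs):
    # Shannon entropy in bits; zero-probability classes contribute nothing
    return -sum(p * math.log2(p) for p in probs if p > 0)


def choose_behavior(class_probs, max_entropy_bits=1.0):
    """Proceed normally when the perception output is confident; otherwise request a fallback."""
    if entropy(class_probs) <= max_entropy_bits:
        return "nominal"
    return "fallback_slow_down"


# Hypothetical class probabilities for one detection: pedestrian / cyclist / other
confident = [0.95, 0.03, 0.02]  # about 0.34 bits
ambiguous = [0.40, 0.35, 0.25]  # about 1.56 bits (the 3-class maximum is log2(3), about 1.58)
```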

Elektrobit

Elektrobit’s EB robinos product provides a middleware layer for autonomous vehicle software that includes runtime monitoring capabilities. The platform can detect system anomalies, manage graceful degradation when components fail, and ensure that safety-critical functions continue operating even when other systems have problems.

This runtime safety layer matters because autonomous vehicles are complex systems where failures are inevitable. How the system behaves when something goes wrong is as important as how it behaves when everything works.
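A minimal version of such a monitoring layer is a heartbeat watchdog. Each component reports periodically, and a supervisor chooses an operating mode based on which components have gone silent. The component names, the timeout, and the three-mode policy below are assumptions for illustration. They are not EB robinos' design.

```python
from enum import Enum


class Mode(Enum):
    NOMINAL = "nominal"
    DEGRADED = "degraded"          # continue with reduced capability
    MINIMAL_RISK = "minimal_risk"  # bring the vehicle to a safe stop


CRITICAL = {"perception", "planning", "control"}  # hypothetical component names
TIMEOUT_S = 0.2                                   # hypothetical heartbeat deadline


def select_mode(last_heartbeat: dict, now: float) -> Mode:
    """Choose an operating mode from each component's last heartbeat timestamp (seconds)."""
    stale = {name for name, t in last_heartbeat.items() if now - t > TIMEOUT_S}
    stale |= CRITICAL - set(last_heartbeat)  # a critical component never heard from is stale
    if stale & CRITICAL:
        return Mode.MINIMAL_RISK
    if stale:
        return Mode.DEGRADED
    return Mode.NOMINAL
```

Losing a non-critical component, such as a display, only degrades operation. Losing perception, planning, or control triggers a minimal-risk maneuver.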

Integration and Development Platforms

Individual tools must work together within coherent development environments. Several platforms attempt to integrate the autonomous vehicle development workflow.

Apex.AI

Apex.AI provides a certified middleware for autonomous vehicles—essentially the software infrastructure that connects perception, prediction, planning, and control components. Their Apex.OS product is designed for functional safety certification, addressing the challenge of building safety-critical systems from multiple components.

For safety-critical development, having certified infrastructure matters. Safety certification is compositional—you can only achieve system-level safety if component-level safety properties compose appropriately. Apex.AI’s focus on certified infrastructure supports this compositional approach.

Autoware Foundation

Autoware provides open-source software for autonomous vehicles that has been adopted by multiple automotive manufacturers and suppliers. While not a commercial product, Autoware’s role in establishing common interfaces and enabling collaboration across the industry has safety implications.

Standardization on common platforms enables shared learning about safety issues. When multiple organizations use similar software, vulnerabilities discovered by one can be addressed by all. This differs from proprietary approaches where each organization learns (and fails) independently.

The Autoware Foundation’s work on safety standards for autonomous vehicle software aims to bring the rigor of traditional automotive safety engineering to open-source development processes.

Practical Considerations for Tool Selection

Having worked with organizations at various stages of autonomous vehicle development, I’ve seen patterns emerge in what makes tool selection effective.

Match Tools to Development Stage

Early-stage research benefits from flexible, open platforms that enable experimentation. Production development requires certified tools with appropriate documentation and support. Using research tools for production development creates technical debt; using production tools for research slows innovation.

I’ve seen organizations struggle when they failed to anticipate tool evolution needs. Starting with tools that can’t scale to production requirements forces painful migrations. Starting with production tools for early research bogs down exploration with unnecessary process overhead.

Consider Ecosystem Integration

Autonomous vehicle development requires many tools to work together. Sensor data must flow into perception systems, perception into planning, planning into simulation. Tools that integrate smoothly enable efficient workflows; tools that don’t integrate create friction and opportunities for error.

The major platform providers (NVIDIA, Qualcomm, Mobileye) offer integrated ecosystems. Point solutions in specific areas may be superior technically but create integration burden. The tradeoff between best-in-class components and integrated platforms depends on organizational capability and priorities.

Assess Safety Certification Status

For production autonomous vehicle features, tool certification status matters. Regulatory acceptance will increasingly depend on evidence that safety-relevant development used appropriately qualified tools. Investing in tool qualification now avoids problems later.

This applies not just to final validation tools but throughout the development chain. Training data quality, simulation fidelity, and development process rigor all affect system-level safety. Tools that support demonstrating appropriate rigor at each stage provide long-term value.

Plan for Long-Term Support

Autonomous vehicle programs span many years. Tools selected today will be used for development, production, and service support for vehicles with 15+ year lifespans. Tool vendor stability, commitment to automotive markets, and support for legacy versions all matter.

I’ve seen projects disrupted by tool discontinuation, licensing changes, or loss of vendor support. Evaluating vendors as long-term partners rather than just current products reduces this risk.

Limitations and Honest Challenges

Despite sophisticated tools, autonomous vehicle safety remains genuinely difficult. Several challenges merit honest acknowledgment.

The Long Tail Problem

Autonomous vehicles must handle not just common situations but the vast tail of unusual events. A human driver might encounter a ladder falling off a truck once in a lifetime; an autonomous fleet operating at scale will encounter it regularly. Tools that systematically explore this long tail of edge cases help, but the tail is effectively infinite.

No amount of simulation or testing can guarantee that all possible situations have been covered. Tools can increase confidence but cannot provide certainty. Remaining humble about what’s been achieved, while continuing to improve, is the appropriate posture.

Sensor Limitations

Current sensor technologies have fundamental limitations. Cameras struggle in low light and bad weather. Radar has limited resolution. Lidar can be affected by precipitation and atmospheric conditions. Sensor fusion helps, but no current sensor suite matches human perception in all conditions.

Tools can optimize performance given sensor limitations, but they can’t overcome those limitations. Safety strategies must account for situations where sensing is degraded or compromised.

AI Brittleness

Deep learning systems can fail in unexpected ways when encountering inputs sufficiently different from training data. The failure modes aren’t always predictable or graceful. A perception system that works perfectly on thousands of test scenarios can fail completely on a scenario just slightly outside its training distribution.

Tools for testing robustness and detecting out-of-distribution inputs help, but don’t fully solve the problem. Runtime monitoring and conservative fallback behaviors provide additional defense, but the fundamental brittleness of current AI systems limits the autonomy that can be safely deployed.

Human-Machine Interaction Challenges

Many current safety features rely on human oversight—drivers are expected to monitor systems and take over when necessary. Evidence suggests humans are poor at this task. Boredom, overconfidence, and distraction all undermine effective oversight.

Tools for monitoring driver attention and enforcing appropriate oversight help, but the fundamental challenge remains. Systems that require human oversight for safety, while making that oversight difficult to maintain, create dangerous situations.
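Driver-monitoring systems often implement oversight as an escalation ladder: the longer the driver's eyes stay off the road, the stronger the intervention. The stage names and time thresholds below are hypothetical and chosen only to show the structure. Real thresholds vary by manufacturer and regulation.

```python
# Hypothetical escalation ladder: (seconds of continuous inattention, action)
ESCALATION = [
    (2.0, "visual_alert"),
    (4.0, "audible_alert"),
    (8.0, "slow_down_and_request_takeover"),
]


def escalation_action(eyes_off_road_s: float) -> str:
    """Return the strongest action whose threshold has been reached."""
    action = "none"
    for threshold, name in ESCALATION:
        if eyes_off_road_s >= threshold:
            action = name
    return action


def track_inattention(gaze_on_road, dt):
    """Accumulate continuous eyes-off-road time from per-frame gaze flags
    (True = looking at the road) and return the action for each frame."""
    off_s = 0.0
    actions = []
    for on_road in gaze_on_road:
        off_s = 0.0 if on_road else off_s + dt  # looking back at the road resets the timer
        actions.append(escalation_action(off_s))
    return actions
```

The reset on each glance back is the weak point the paragraph describes. A driver who glances up briefly every second never escalates, even while paying little real attention.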

Looking Ahead

The trajectory of autonomous vehicle safety tools points toward several developments:

Increased integration of development, simulation, and validation workflows will reduce friction and improve traceability from requirements through deployment.

Better synthetic data will enable training and testing on scenarios that rarely occur in the real world, improving coverage of the long tail.

Runtime adaptation will enable systems to improve continuously based on fleet experience rather than requiring periodic updates.

Regulatory frameworks will mature, creating clearer requirements for tool qualification and evidence of safety.

Standardization will increase as the industry converges on common approaches to sensor interfaces, safety requirements, and validation methodologies.

The tools described here represent the current state of a rapidly evolving field. What’s leading-edge today will be table stakes within a few years, as the industry moves toward higher levels of autonomy and the safety requirements that come with them.

Final Thoughts

Autonomous vehicle safety is neither a solved problem nor an impossible one. The tools available today enable safety features that genuinely save lives—automatic emergency braking, lane keeping assistance, and adaptive cruise control are preventing accidents that would otherwise occur. Progress toward higher levels of autonomy continues, supported by increasingly sophisticated tools.

But the gap between current capabilities and full autonomy remains substantial. The tools are necessary but not sufficient. Engineering judgment, systematic safety processes, and appropriate humility about what’s been achieved all remain essential.

The child, the ball, and the dog I watched the test vehicle navigate successfully? That was one scenario, one time, under controlled conditions. The production vehicles on public roads face infinite variations of that scenario, every day, with lives depending on getting it right. The tools I’ve described help—genuinely help—but the work of making autonomous vehicles truly safe continues.

For those of us working in this space, the responsibility is substantial and the tools are improving. But there’s no shortcut to safety. It’s earned through rigorous development, systematic validation, and honest acknowledgment of what we don’t yet know how to do.